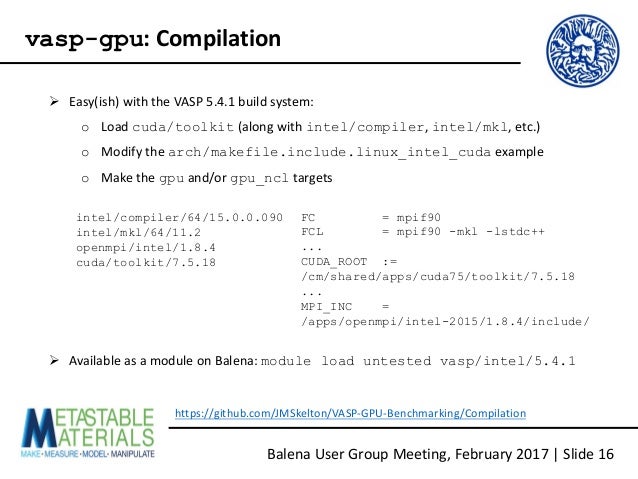

There are even GPU-capable versions. They are built with Intel 2016.2 and CUDA 8, and since CUDA 8 does not support Intel 2017, they need the slightly older compiler: unload the intel module that vasp/5.4.4 loads and load intel 2016.2 instead. To use VASP, set up the software environment via, e.g., 'module purge' followed by 'module load vasp/5.4.4'. Three distinct executables have been made available, including vasp_gam, for Gamma-point-only runs typical of large unit cells, and vasp_std, for general k-point meshes with collinear spins. The VASP 5.4.4 Patch 16052018 installation built for use together with Wannier90 2.1.0 (nsc1-intel-2018a) can be loaded and launched with, e.g., 'module add VASP/5.4.4.16052018-wannier90-nsc1-intel-2018a-eb' followed by 'mpprun vasp_std'. For an introduction to the basics of VASP, the collections of workshops, lectures, and tutorials and examples are good places to start.

The Vienna Ab initio Simulation Package (VASP) is a computer program for atomic scale materials modelling, e.g. electronic structure calculations and quantum-mechanical molecular dynamics, from first principles.

VASP computes an approximate solution to the many-body Schrödinger equation, either within density functional theory (DFT), solving the Kohn-Sham equations, or within the Hartree-Fock (HF) approximation, solving the Roothaan equations. Hybrid functionals that mix the Hartree-Fock approach with density functional theory are implemented as well. Furthermore, Green's function methods (GW quasiparticles, and ACFDT-RPA) and many-body perturbation theory (2nd-order Møller-Plesset) are available in VASP.

In VASP, central quantities, like the one-electron orbitals, the electronic charge density, and the local potential are expressed in plane wave basis sets. The interactions between the electrons and ions are described using norm-conserving or ultrasoft pseudopotentials, or the projector-augmented-wave method.

To determine the electronic ground state, VASP makes use of efficient iterative matrix diagonalisation techniques, like the residual minimisation method with direct inversion of the iterative subspace (RMM-DIIS) or blocked Davidson algorithms. These are coupled to highly efficient Broyden and Pulay density mixing schemes to speed up the self-consistency cycle.
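The idea behind Pulay density mixing can be illustrated with a small toy model. The Python sketch below assumes NumPy is available and is not VASP's implementation: a vector x stands in for the charge density, a hypothetical map F stands in for one SCF step (input density in, output density out), and the next input is built from the stored history by the Pulay/DIIS rule, i.e. coefficients that sum to one and minimise the norm of the combined residual. The mixing parameter alpha, the history length and the tolerance are illustrative choices, not VASP defaults.

```python
import numpy as np


def pulay_mix(F, x0, alpha=0.5, history=5, tol=1e-8, max_iter=200):
    """Toy Pulay (DIIS) mixing for the fixed-point problem x = F(x).

    x stands in for the input charge density and F(x) for the output
    density of one SCF step. Returns (solution, number of F evaluations).
    """
    xs, rs = [], []                       # recent inputs and their residuals
    x = np.asarray(x0, dtype=float)
    for n_eval in range(1, max_iter + 1):
        r = F(x) - x                      # residual R = rho_out - rho_in
        if np.linalg.norm(r) < tol:
            return x, n_eval
        xs = (xs + [x])[-history:]
        rs = (rs + [r])[-history:]
        if len(rs) == 1:
            x = x + alpha * r             # first step: plain linear mixing
            continue
        # Minimise |sum_i c_i R_i| subject to sum_i c_i = 1, rewritten in
        # terms of differences to the newest residual as a least-squares fit.
        dR = np.column_stack([ri - r for ri in rs[:-1]])
        dX = np.column_stack([xi - x for xi in xs[:-1]])
        gamma = np.linalg.lstsq(dR, -r, rcond=None)[0]
        x_opt = x + dX @ gamma            # optimal combination of inputs
        r_opt = r + dR @ gamma            # corresponding combined residual
        x = x_opt + alpha * r_opt         # next input "density"
    raise RuntimeError("Pulay mixing did not converge")


if __name__ == "__main__":
    # Toy linear "SCF" map F(x) = A x + b with spectral radius 0.45
    rng = np.random.default_rng(0)
    M = rng.standard_normal((10, 10))
    S = M + M.T
    A = 0.45 * S / np.linalg.norm(S, 2)
    b = rng.standard_normal(10)
    x, n_eval = pulay_mix(lambda v: A @ v + b, np.zeros(10))
    exact = np.linalg.solve(np.eye(10) - A, b)
    print(n_eval, np.max(np.abs(x - exact)))
```

On a linear toy map the combined residual r_opt is never larger than the newest residual; real SCF maps are nonlinear, which is why production codes add safeguards that this sketch omits.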

Using VASP on Cirrus

VASP is only available to users who have a valid VASP licence.

If you have a VASP licence and wish to have access to VASP on Cirrus, please contact the Cirrus Helpdesk.

Running parallel VASP jobs

VASP can exploit multiple nodes on Cirrus and will generally be run in exclusive mode over more than one node.

To access VASP you should load the vasp module in your job submission scripts:

Once loaded, the executables are called:

- vasp_std - Multiple k-point version

- vasp_gam - GAMMA-point only version

- vasp_ncl - Non-collinear version

All 5.4.* executables include the additional MD algorithms accessed via the MDALGO keyword.

You can access the LDA and PBE pseudopotentials for VASP on Cirrus at:

The following script will run a VASP job using 4 nodes (144 cores).

New VASP6 released

VASP6 was released at the beginning of 2020. This means, e.g., that VASP5 license holders will need to update their license in order to access the VASP6 installations at NSC. If you bought a VASP 5.4.4 license, you are most likely already covered for version 6.1.X; check your license details.

The new features are described in the VASP wiki.

First of all, VASP is licensed software: your name needs to be included on a VASP license in order to use NSC's centrally installed VASP binaries. Read more about how we handle licensing of VASP at NSC.

Some problems which can be encountered running VASP are described at the end of this page.

How to run: quick start

A minimum batch script for running VASP looks like this:

This script allocates 4 compute nodes with 32 cores each, for a total of 128 cores (or MPI ranks) and runs VASP in parallel using MPI. Note that you should edit the jobname and the account number before submitting.

For best performance, use these settings in the INCAR file for regular DFT calculations:

For hybrid-DFT calculations, use:

POTCAR

PAW potential files, POTCARs, can be found here:

Read more on recommended PAW potentials.

Modules

NSC's VASP binaries have hard-linked paths to their run-time libraries, so you do not need to load a VASP module or set LD_LIBRARY_PATH and the like for them to work. We mainly provide modules for convenience, so that you do not need to remember the full paths to the binaries. However, if you run the binary directly for non-vanilla installations built with intel-2018a, you need to set the following in the job script (see details in section 'Problems' below):

This is not needed for other modules built with e.g. intel-2018b.

There is generally only one module of VASP per released version directly visible in the module system. It is typically the recommended standard installation of VASP without source code modifications. Load the VASP module corresponding to the version you want to use.

Then launch the desired VASP binary with 'mpprun':

Naming scheme and available installations

The VASP installations are found under /software/sse/manual/vasp/version/buildchain/nsc[1,2,..]/. Each nscX directory corresponds to a separate installation. Typically, the highest-numbered build with the latest buildchain is the preferred version. The installations may differ in the compiler flags used, the level of optimization applied, and the number of third-party patches and bug fixes added.

Each installation contains three different VASP binaries:

- vasp_std: 'normal' version for bulk systems

- vasp_gam: gamma-point only (big supercells or clusters)

- vasp_ncl: for spin-orbit/non-collinear calculations

We recommend using either vasp_std or vasp_gam if possible, in order to decrease memory usage and increase numerical stability. Mathematically, the half (vasp_std) and gamma (vasp_gam) versions should give identical results to the full (vasp_ncl) version for applicable systems. Sometimes you can see a disagreement due to the way floating-point arithmetic works in a computer. In such cases, we would be more inclined to believe the gamma/half results, since they preserve symmetries to a higher degree.
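The choice between the three binaries can be summarised as a small decision rule. The Python helper below is hypothetical (not an NSC tool) and simply encodes the list above: non-collinear or spin-orbit runs need vasp_ncl, Gamma-point-only runs can use vasp_gam, and everything else uses vasp_std.

```python
def choose_vasp_binary(noncollinear: bool, gamma_only: bool) -> str:
    """Pick one of the three VASP binaries (hypothetical helper).

    noncollinear: spin-orbit coupling or non-collinear magnetism is needed
    gamma_only:   the k-point set consists of the Gamma point alone
    """
    if noncollinear:
        return "vasp_ncl"   # only binary that handles spin-orbit/non-collinear
    if gamma_only:
        return "vasp_gam"   # cheaper option for big supercells or clusters
    return "vasp_std"       # general k-point meshes, collinear spins


if __name__ == "__main__":
    print(choose_vasp_binary(noncollinear=False, gamma_only=True))  # vasp_gam
```

The non-collinear check comes first because a Gamma-point-only spin-orbit calculation still needs vasp_ncl.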

Constrained structure relaxation

Binaries for constrained structure relaxation are available for some of the modules. The naming scheme is as follows, e.g.:

- vasp_x_std: relaxation only in x-direction, yz-plane is fixed

- vasp_xy_std: relaxation only in xy-plane, z-direction is fixed

Note: the naming scheme gives the direction(s) in which relaxation is allowed.

vdW-kernel

The VASP binaries contain a hard-coded path to the vdW_kernel.bindat file located in the /software file system, so that you do not need to generate it from scratch.

VASP 6.1.2

The update 6.1.2 fixes a broken constrained magnetic moment approach in VASP6 (ok in VASP5). Also included (assumed from update 6.1.1) is a fix for a bug which affected the non-local part of the forces in noncollinear magnetic calculations with NCORE not set to 1 (ok in VASP5).

nsc1-intel-2018a: Compiled in the same way as for 6.1.0 (see below).

omp-nsc1-intel-2018a: Compiled for OpenMP threading, otherwise similar to the above installation. Examples of how best to use the OpenMP threading for efficiency will soon be added. In brief, it might e.g. be useful for increasing efficiency when you have reduced the number of tasks (MPI ranks) per node in order to save memory.

Compare with the example job script from the top of the page, now setting 4 OpenMP threads. Note the reduced number of tasks (MPI ranks) per node, such that 8 tasks x 4 OpenMP threads = 32 (cores/node). Also note the setting of OMP_NUM_THREADS and KMP_STACKSIZE.
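The tasks-times-threads bookkeeping can be checked with a few lines of Python. This is a minimal sketch with a hypothetical helper name, assuming 32 cores per node as on Tetralith; it only computes the number of MPI tasks per node and the matching OMP_NUM_THREADS value, and does not cover KMP_STACKSIZE, whose recommended value is not given here.

```python
def hybrid_layout(cores_per_node: int = 32, omp_threads: int = 4) -> dict:
    """Split a node's cores between MPI ranks and OpenMP threads.

    Returns the number of MPI tasks per node and the OMP_NUM_THREADS value
    such that tasks x threads equals the cores available on one node.
    """
    if omp_threads < 1 or cores_per_node % omp_threads != 0:
        raise ValueError("omp_threads must divide the number of cores per node")
    return {
        "tasks_per_node": cores_per_node // omp_threads,
        "OMP_NUM_THREADS": str(omp_threads),
    }


if __name__ == "__main__":
    print(hybrid_layout())  # {'tasks_per_node': 8, 'OMP_NUM_THREADS': '4'}
```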

VASP 6.1.0

When testing VASP6, I needed to set 'export I_MPI_ADJUST_REDUCE=3' for stable calculations (this is done automatically at NSC when loading the corresponding VASP modules). Otherwise, calculations might differ slightly or behave differently (in ca. 1 out of 10 cases).

nsc1-intel-2018a: The first VASP6 module at NSC. A build with no modifications of the source code, using more conservative optimization options (-O2 -xCORE-AVX2). This build does not include OpenMP. All tests in the new officially provided test suite passed.

VASP 5.4.4 Patch 16052018

nsc1-intel-2018a: After a fix in the module, which now sets I_MPI_ADJUST_REDUCE=3 automatically (see the discussion in Modules), this is again the recommended module. VASP built from the original source with minimal modifications, using conservative optimization options (-O2 -xCORE-AVX512). The VASP binaries enforce conditional numerical reproducibility at the AVX-512 bit level in Intel's MKL library, which we believe improves numerical stability at no cost to performance.

nsc1-intel-2018b: Built with a slightly newer Intel compiler. However, it seems more sensitive in certain calculations, leading to crashes, which are avoided by using nsc1-intel-2018a.

nsc2-intel-2018a: Because of spurious problems with vasp_gam, this build is compiled differently from the nsc1-intel-2018a build (-O2 -xCORE-AVX2).

VASP 'vanilla' 5.4.4 Patch 16052018

nsc1-intel-2018a: A special debug installation is available. VASP built with debugging information and lower optimization. Mainly intended for troubleshooting and running with a debugger. Do not use it for regular calculations, e.g.:

If you see lots of 'BRMIX: very serious problems' error messages, this might provide a solution. Note: this module doesn't actually include the latest patch 16052018.

VASP 5.4.4 Patch 16052018 with Wannier90 2.1.0

nsc1-intel-2018a: VASP built for use together with Wannier90 2.1.0. Load and launch e.g. with:

VASP 5.4.4 Patch 16052018 utility installation with VTST, VASPsol and BEEF

nsc2-intel-2018a: VASP built in the same way as the regular version, but including VTST 3.2 (vtstcode-176, vtstscripts-935), VASPsol and BEEF. For more information, check the respective links.

VASP 'vanilla' 5.4.4 Patch 16052018 utility installation with VTST, VASPsol and BEEF

nsc1-intel-2018a: A special debug installation is available.

VASP 5.4.4 Patch 16052018 with occupation matrix control modification

nsc1-intel-2018a: Similar to the regular version vasp module nsc2-intel-2018a, but modified for occupation matrix control of d and f electrons. See this link and this other link for more information.

Performance and scaling

In general, you can expect about 2-3x higher VASP performance per compute node compared with Triolith, provided that your calculation can scale up to use more cores. In many cases it cannot, so if you ran on X nodes on Triolith (#SBATCH -N X), we recommend using X/2 nodes on Tetralith, but changing to NCORE=32. The new processors are about 1.0-1.5x faster on a per-core basis, so you will still enjoy some speed-up even when using the same number of cores.

Selecting the right number of compute nodes

Initial benchmarking on Tetralith showed that the parallel scaling of VASP on Tetralith is equal to or better than on Triolith. This means that while you can run calculations close to the parallel scaling limit of VASP (1 electronic band per CPU core), it is not recommended from an efficiency point of view. You can easily end up wasting 2-3 times more core hours than you need to. A rule of thumb is that 6-12 bands/core gives you 90% efficiency, whereas scaling all the way out to 2 bands/core gives you 50% efficiency. 1 band/core typically results in < 50% efficiency, so we recommend against it. If you use k-point parallelization, which we also recommend, you can potentially multiply the number of nodes by up to the number of k-points (check NKPT in OUTCAR and set KPAR in INCAR). A good guess for how many compute nodes to allocate is therefore:

Example: suppose we want to do regular DFT molecular dynamics on a 250-atom metal cell with 16 valence electrons per atom. With 250 x 16 = 4000 valence electrons, there will be at least 4000/2 + 250/2 = 2125 bands in VASP. Thus, this calculation can be run with up to 2125/32 = ca 66 Tetralith compute nodes, but it would be very inefficient. Instead, a suitable choice might be ca 10 bands per core, or 2125/10 ≈ 213 cores, which corresponds to around 6-7 compute nodes. To avoid prime numbers (7), we would likely run three test jobs with 6, 8 and 12 Tetralith compute nodes to check the parallel scaling.
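The arithmetic of this example can be reproduced with a short Python sketch. It assumes 32 cores per node and uses the NELECT/2 + NIONS/2 band estimate from the example, which is only a lower bound (VASP may use more bands, so check NBANDS in OUTCAR). The helper names are hypothetical and this is our reading of the rule of thumb, not an official NSC formula.

```python
import math

CORES_PER_NODE = 32  # Tetralith compute node, as in the batch script section


def estimate_nbands(n_valence_electrons: int, n_atoms: int) -> int:
    """Rule-of-thumb lower bound on the band count: NELECT/2 + NIONS/2."""
    return math.ceil(n_valence_electrons / 2 + n_atoms / 2)


def suggest_nodes(nbands: int, bands_per_core: float = 10.0, kpar: int = 1,
                  cores_per_node: int = CORES_PER_NODE) -> float:
    """Nodes needed to reach a target number of bands per core.

    Each of the KPAR k-point groups distributes all bands over its own
    cores, so the core (and node) count is multiplied by kpar.
    """
    cores_per_group = nbands / bands_per_core
    return kpar * cores_per_group / cores_per_node


if __name__ == "__main__":
    nbands = estimate_nbands(250 * 16, 250)
    print(nbands)                                  # 2125
    print(round(suggest_nodes(nbands, 1.0), 1))    # scaling limit: 1 band/core
    for bpc in (12, 10, 6):                        # 6-12 bands/core ~ 90% efficiency
        print(bpc, round(suggest_nodes(nbands, bpc), 1))
```

The 6-12 bands/core range gives roughly 5.5-11 nodes for this cell, consistent with testing 6, 8 and 12 nodes.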

A more in depth explanation with example can be found in the blog post 'Selecting the right number of cores for a VASP calculation'.

What to expect in terms of performance

To show the capability of Tetralith, and to provide some guidance on what kinds of jobs can be run and how long they would take, we have re-run the test battery used for profiling the Cray XC-40 'Beskow' machine in Stockholm (more info here). It consists of doped GaAs supercells of varying sizes, with the number of k-points adjusted correspondingly.

Fig. 1: Parallel scaling on Tetralith of GaAs supercells with 64 atoms / 192 bands, 128 atoms / 384 bands, 256 atoms / 768 bands, and 512 atoms / 1536 bands. All calculations used k-point parallelization to the maximum extent possible, typically KPAR=NKPT. The measured time is the time taken to complete one full SCF cycle.

The tests show that small DFT jobs (< 100 atoms) run very fast using k-point parallelization, even with a modest number of compute nodes. The time for one SCF cycle can often be less than 1 minute, i.e. 60 or more geometry optimization steps per hour. In contrast, hybrid-DFT calculations (HSE06) take ca 50x longer to finish, regardless of how many nodes are thrown at the problem. They scale somewhat better, so typically you can use twice the number of nodes at the same efficiency, but that is not enough to make up the difference in run time. This is something that you must budget for when planning the calculation.

As a practical example, let us calculate how many core hours would be required to run 10,000 full SCF cycles (say 100 geometry optimizations, or a few molecular dynamics simulations). The number of nodes has been chosen so that the parallel efficiency is > 90%:

| Atoms | Bands | Nodes | Core hours |
| --- | --- | --- | --- |
| 64 | 192 | 5 | 8,000 |
| 128 | 384 | 12 | 39,000 |
| 256 | 768 | 18 | 130,000 |
| 512 | 1536 | 16 | 300,000 |

The same table for 10,000 SCF cycles of HSE06 calculations looks like:

| Atoms | Bands | Nodes | Core hours |
| --- | --- | --- | --- |
| 64 | 192 | 10 | 400,000 |
| 128 | 384 | 36 | 2,000,000 |
| 256 | 768 | 36 | 6,900,000 |
| 512 | 1536 | 24 | 13,000,000 |

For comparison, a typical large SNAC project might have an allocation of 100,000-1,000,000 core hours per month with several project members, while a smaller personal allocation might be 5,000-10,000 core hours/month. Thus, while it is technically possible to run very large VASP calculations quickly on Tetralith, careful planning of core hour usage is necessary, or you will exhaust your project allocation.
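The core-hour budgeting above is simple arithmetic: core hours = nodes x cores per node x wall-clock hours. The Python sketch below assumes 32 cores per node and uses hypothetical helper names; it converts a table row back into the implied wall time per SCF cycle and checks how much of a monthly allocation a run would use (the 10,000 core-hour/month figure is the small personal allocation mentioned above, used only as an example).

```python
CORES_PER_NODE = 32  # Tetralith


def core_hours(nodes: int, seconds_per_scf: float, n_scf: int,
               cores_per_node: int = CORES_PER_NODE) -> float:
    """Core hours charged for n_scf full SCF cycles on a given node count."""
    wall_hours = seconds_per_scf * n_scf / 3600
    return nodes * cores_per_node * wall_hours


def seconds_per_scf(core_hours_used: float, nodes: int, n_scf: int,
                    cores_per_node: int = CORES_PER_NODE) -> float:
    """Invert core_hours(): the wall time per SCF cycle implied by a table row."""
    return core_hours_used * 3600 / (nodes * cores_per_node * n_scf)


if __name__ == "__main__":
    # DFT table: 64 atoms, 5 nodes, 8,000 core hours for 10,000 SCF cycles
    t = seconds_per_scf(8_000, 5, 10_000)
    print(t)                                   # 18.0 (seconds per SCF cycle)
    # Fraction of a 10,000 core-hour/month allocation used by that run
    print(core_hours(5, t, 10_000) / 10_000)   # 0.8
```

Applied to the 64-atom HSE06 row (10 nodes, 400,000 core hours), the same inversion gives 450 seconds per SCF cycle.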

Another important difference vs Triolith is the larger memory capacity. Tetralith has 96 GB of RAM per node (about 3 GB/core vs 2 GB/core on Triolith). This allows you to run larger calculations using fewer compute nodes, which is typically more efficient. In the example above, the 512-atom GaAsBi supercell with HSE06 was not really possible to run efficiently on Triolith due to limited memory.

Compiling VASP from source

Finally, some notes and observations on compiling VASP on Tetralith. You can find makefiles in the VASP installation directories under /software/sse/manual/vasp/.

- Intel's Parallel Studio 2018 Update 1 compilers and MKL appear to work well. Use the buildenv-intel/2018a-eb module on Tetralith to compile. You can use it even if you compile by hand rather than with EasyBuild.

- VASP's default makefile.include can be used, but we strongly recommend compiling with at least the -xCORE-AVX2 or -xCORE-AVX512 optimization flags for better performance on modern hardware.

- Do not compile with -O3 using Intel's 2018 compiler; stay with -O2. When we tested it, -O3 was not faster, and it produced binaries with random, but repeatable, convergence problems (1 out of 100 calculations or so).

- VASP still cannot be compiled in parallel, but you can always try running make -j4 std or similar repeatedly.

- VASP compiled with gcc, OpenBLAS and OpenMPI runs slower on Tetralith in our tests.

- Compiling with -xCORE-AVX512 and letting Intel's MKL library use AVX-512 instructions (which it does by default) seems to help in most cases, giving ca 5-10% better performance. We have seen cases where VASP runs faster with AVX2 only, so it is worth trying. It might depend on a combination of Intel Turbo Boost frequencies and NSIM, but this remains to be investigated. If you want to try, you can compile with -xCORE-AVX2 and then set the environment variable MKL_ENABLE_INSTRUCTIONS=AVX2 to force AVX2 only. This should make the CPU cores clock a little higher, at the expense of fewer FLOPS/cycle.

- We have turned on conditional bitwise reproducibility at the AVX512 level in the centrally installed binaries on Tetralith. If you compile yourself, you can also enable this at run time using the MKL_CBWR=AVX512 environment variable. This is more of an old habit, as we haven't seen any explicit problems with reproducibility so far, but it does not hurt performance. The fluctuations are typically in the 15th decimal or so. Please note that MKL_CBWR=AVX, or similar settings, severely impact performance (-20%).

- Our local VASP test suite passes for version 5.4.4 Patch #1 with the same deviations as previously seen on Triolith.

Problems

BRMIX: very serious problems the old and the new charge density differ

If you encounter the error 'BRMIX: very serious problems the old and the new charge density differ' in the Slurm output of VASP calculations on Tetralith / Sigma, for cases which typically work on other clusters and worked on Triolith / Gamma, it might be related to a bug that was traced back to MPI_REDUCE calls. These problems were transient, meaning that out of several identical jobs, some went through while others failed. Our VASP modules are now updated to use another algorithm by setting I_MPI_ADJUST_REDUCE=3, which shouldn't affect performance. If you don't load the modules but run the binaries directly, set the following in the job script:

More details for the interested: the problem was further traced to our setting of the NB blocking factor for the distribution of matrices to NB=32 in scala.F. The VASP default of NB=16 seems to work fine, and NB=96 also worked fine on Triolith. Switching off ScaLAPACK in the INCAR file (LSCALAPACK = .FALSE.) also avoids the problem. Furthermore, the problem didn't appear for gcc + OpenBLAS + OpenMPI builds.

Newer VASP modules built with e.g. intel-2018b use the VASP default NB=16, while the non-vanilla modules built with intel-2018a have NB=32 set.

internal error in SETUP_DEG_CLUSTERS: NB_TOT exceeds NMAX_DEG

If you encounter the error 'internal error in SETUP_DEG_CLUSTERS: NB_TOT exceeds NMAX_DEG', typically in phonon calculations, you can try the specially compiled versions with higher values of NMAX_DEG, e.g.: